Human Library

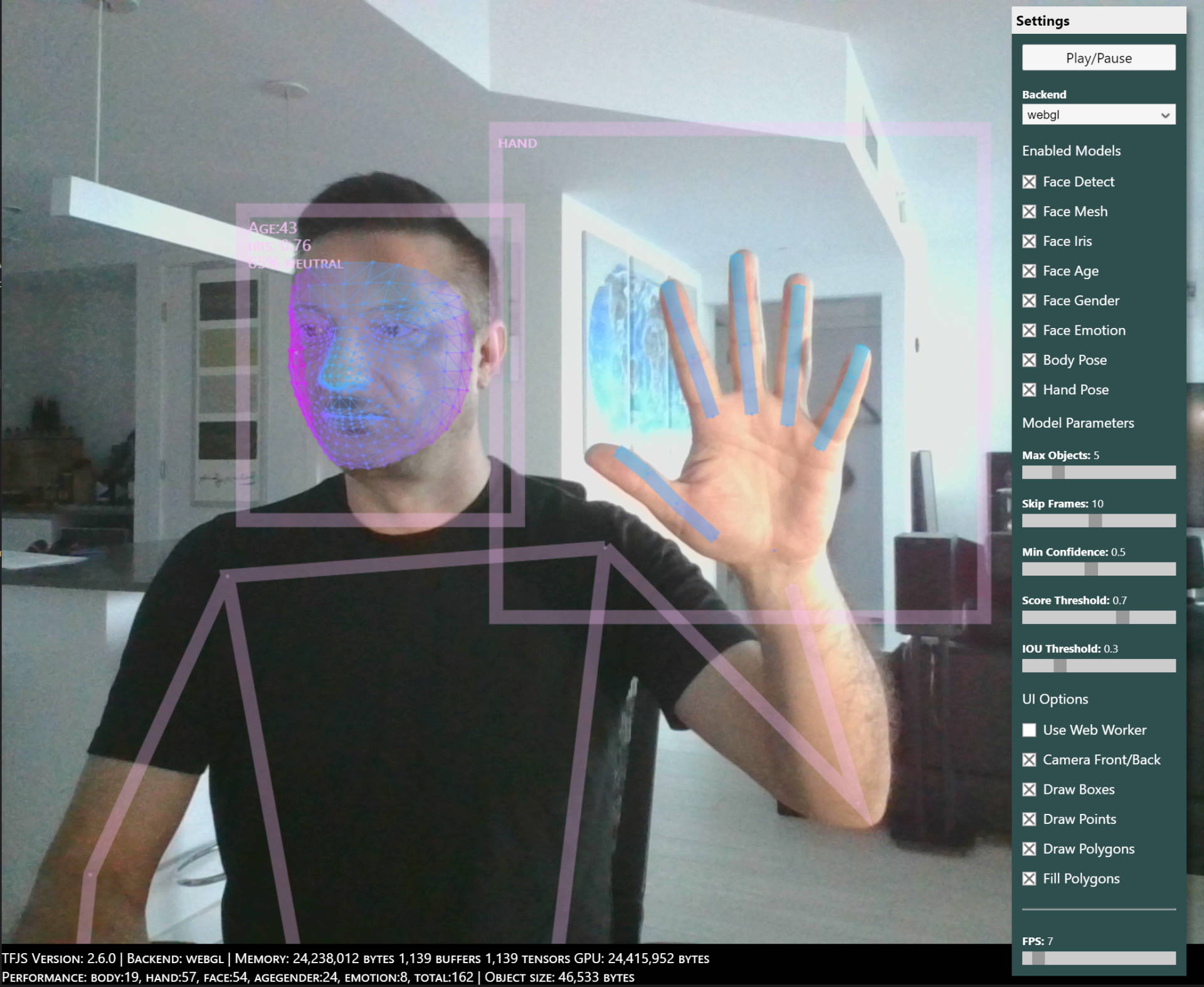

3D Face Detection, Body Pose, Hand & Finger Tracking, Iris Tracking, Age & Gender Prediction & Emotion Prediction

Compatible with Browser, WebWorker and NodeJS execution!

This is a pre-release project, see issues for list of known limitations

Suggestions are welcome!

Examples

Installation

Important

The packaged (IIFE and ESM) version of Human includes TensorFlow/JS (TFJS) 2.6.0 library which can be accessed via human.tf

You should NOT manually load another instance of tfjs, but if you do, be aware of possible version conflicts

There are multiple ways to use Human library, pick one that suits you:

Included

- dist/human.js: IIFE format minified bundle with TFJS for Browsers

- dist/human.esm.js: ESM format minified bundle with TFJS for Browsers

- dist/human.esm-nobundle.js: ESM format non-minified bundle without TFJS for Browsers

- dist/human.cjs: CommonJS format minified bundle with TFJS for NodeJS

- dist/human-nobundle.cjs: CommonJS format non-minified bundle without TFJS for NodeJS

All versions include sourcemap

Defaults:

{

"main": "dist/human.cjs",

"module": "dist/human.esm.js",

"browser": "dist/human.esm.js"

}

1. IIFE script

Simplest way for usage within Browser

Simply download dist/human.js, include it in your HTML file & it's ready to use.

<script src="dist/human.js"></script>

IIFE script auto-registers global namespace human within global Window object

This way you can also use the Human library from an embedded <script> tag within your HTML page for an all-in-one approach

2. ESM module

Recommended for usage within Browser

2.1 With Bundler

If you're using a bundler (such as rollup, webpack, esbuild) to package your client application, you can import the ESM version of the Human library, which supports full tree shaking

Install with:

npm install @vladmandic/human

import human from '@vladmandic/human'; // points to @vladmandic/human/dist/human.esm.js

Or if you prefer to package your version of tfjs, you can use nobundle version

Install with:

npm install @vladmandic/human @tensorflow/tfjs

import * as tf from '@tensorflow/tfjs';

import human from '@vladmandic/human/dist/human.esm-nobundle.js'; // same functionality as default import, but without tfjs bundled

2.2 Using Script Module

You could use same syntax within your main JS file if it's imported with <script type="module">

<script src="./index.js" type="module"></script>

and then in your index.js

import * as tf from 'https://cdnjs.cloudflare.com/ajax/libs/tensorflow/2.6.0/tf.es2017.min.js'; // load tfjs directly from CDN link

import human from './dist/human.esm.js'; // for direct import must use relative path to module, not package name

3. NPM module

Recommended for NodeJS projects that will execute in the backend

Entry point is the bundle in CJS format, dist/human.cjs

You also need to install and include tfjs-node or tfjs-node-gpu in your project so it can register an optimized backend

Install with:

npm install @vladmandic/human

And then use with:

const human = require('@vladmandic/human'); // points to @vladmandic/human/dist/human.cjs

or

npm install @vladmandic/human @tensorflow/tfjs-node

And then use with:

const tf = require('@tensorflow/tfjs-node'); // can also use '@tensorflow/tfjs-node-gpu' if you have environment with CUDA extensions

const human = require('@vladmandic/human/dist/human-nobundle.cjs');

Since NodeJS projects load weights from local filesystem instead of using http calls, you must modify default configuration to include correct paths with file:// prefix

For example:

const config = {

body: { enabled: true, modelPath: 'file://models/posenet/model.json' },

}
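If the default configuration holds many relative model paths, rewriting each one by hand is tedious. The following is a minimal sketch of one way to do it in bulk. `toFileModelPaths` is a hypothetical helper, not part of Human, and it assumes every path to rewrite sits under a `modelPath` key and follows the `../models/...` layout of the defaults.

```javascript
// Hypothetical helper, not part of Human: returns a copy of `config` in which
// every string stored under a `modelPath` key is rewritten
// from '../models/<rest>' to 'file://<modelsDir>/<rest>'.
function toFileModelPaths(config, modelsDir) {
  const base = modelsDir.replace(/\/+$/, ''); // drop trailing slashes
  const out = Array.isArray(config) ? [] : {};
  for (const [key, value] of Object.entries(config)) {
    if (value !== null && typeof value === 'object') {
      out[key] = toFileModelPaths(value, modelsDir); // recurse into nested modules
    } else if (key === 'modelPath' && typeof value === 'string') {
      const rest = value.replace(/^(\.\.?\/)*models\//, ''); // strip the relative prefix
      out[key] = `file://${base}/${rest}`;
    } else {
      out[key] = value; // leave all other settings untouched
    }
  }
  return out;
}

const nodeConfig = toFileModelPaths({
  body: { enabled: true, modelPath: '../models/posenet/model.json' },
}, 'models');
console.log(nodeConfig.body.modelPath); // file://models/posenet/model.json
```

The helper returns a new object and leaves its input unchanged, so the same base configuration can still be used for browser builds.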

Note that when using Human in NodeJS, you must load and parse the image before you pass it for detection

For example:

const fs = require('fs');

const buffer = fs.readFileSync(input);

const image = tf.node.decodeImage(buffer);

const result = await human.detect(image, config); // detect() is asynchronous, so call it from an async function

image.dispose();

Weights

Pretrained model weights are included in ./models

Default configuration uses paths relative to your entry script, pointing to ../models

If your application resides in a different folder, modify modelPath property in configuration of each module

Demo

Demos are included in /demo:

Browser:

- demo-esm: Full demo using Browser with ESM module, includes selectable backends and webworkers

- demo-iife: Older demo using Browser with IIFE module

NodeJS:

demo-node: Demo using NodeJS with CJS module

This is a very simple demo: the Human library is compatible with NodeJS execution and is able to load images and models from the local filesystem.

Usage

Human library does not require special initialization.

All configuration is done in a single JSON object, and all model weights are loaded dynamically upon their first usage (and only then: Human will not load weights that it doesn't need according to configuration).

There is only ONE method you need:

import * as tf from '@tensorflow/tfjs';

import human from '@vladmandic/human';

// 'image': can be of any type of an image object: HTMLImage, HTMLVideo, HTMLMedia, Canvas, Tensor4D

// 'options': optional parameter used to override any options present in default configuration

const result = await human.detect(image, options);

or if you want to use promises

human.detect(image, options).then((result) => {

// your code

})

Additionally, the Human library exposes several properties:

human.config // access to configuration object, normally set as parameter to detect()

human.defaults // read-only view of default configuration object

human.models // dynamically maintained list of objects for all loaded models

human.tf // instance of tfjs used by human

Configuration

Below is output of human.defaults object

Any property can be overridden by passing a user object to human.detect()

Note that user object and default configuration are merged using deep-merge, so you do not need to redefine entire configuration

The configuration object is large, but typically you only need to modify a few values:

- enabled: Choose which models to use

- skipFrames: Must be set to 0 for static images

- modelPath: Update as needed to reflect your application's relative path
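Because skipFrames appears in several modules, one way to prepare a configuration for static images is to reset every occurrence at once. This is a minimal sketch. `withoutFrameSkipping` is a hypothetical helper, not part of Human, and the override object below copies only a few values from the defaults for illustration.

```javascript
// Hypothetical helper, not part of Human: returns a copy of `config`
// with every nested `skipFrames` value set to 0.
function withoutFrameSkipping(config) {
  const out = {};
  for (const [key, value] of Object.entries(config)) {
    if (value !== null && typeof value === 'object' && !Array.isArray(value)) {
      out[key] = withoutFrameSkipping(value); // recurse into nested modules
    } else {
      out[key] = key === 'skipFrames' ? 0 : value;
    }
  }
  return out;
}

const videoOverrides = {
  face: { detector: { skipFrames: 10 }, age: { skipFrames: 10 }, emotion: { skipFrames: 10 } },
  hand: { skipFrames: 10 },
};
const imageOverrides = withoutFrameSkipping(videoOverrides);
console.log(imageOverrides.face.detector.skipFrames); // 0
```

The resulting object can be passed as the second argument to human.detect() when processing still images.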

export default {

backend: 'webgl', // select tfjs backend to use

console: true, // enable debugging output to console

face: {

enabled: true, // controls if specified module is enabled

// face.enabled is required for all face models: detector, mesh, iris, age, gender, emotion

// note: module is not loaded until it is required

detector: {

modelPath: '../models/blazeface/back/model.json', // can be 'tfhub', 'front' or 'back'.

// 'front' is optimized for large faces such as front-facing camera and 'back' is optimized for distant faces.

inputSize: 256, // fixed value: 128 for 'tfhub' and 'front', 256 for 'back'

maxFaces: 10, // maximum number of faces detected in the input, should be set to the minimum number for performance

skipFrames: 10, // how many frames to go without re-running the face bounding box detector

// if model is running at 25 FPS, we can re-use existing bounding box for updated face mesh analysis

// as face probably hasn't moved much in short time (10 * 1/25 = 0.4 sec)

minConfidence: 0.5, // threshold for discarding a prediction

iouThreshold: 0.3, // threshold for deciding whether boxes overlap too much in non-maximum suppression

scoreThreshold: 0.7, // threshold for deciding when to remove boxes based on score in non-maximum suppression

},

mesh: {

enabled: true,

modelPath: '../models/facemesh/model.json',

inputSize: 192, // fixed value

},

iris: {

enabled: true,

modelPath: '../models/iris/model.json',

enlargeFactor: 2.3, // empiric tuning

inputSize: 64, // fixed value

},

age: {

enabled: true,

modelPath: '../models/ssrnet-age/imdb/model.json', // can be 'imdb' or 'wiki'

// which determines training set for model

inputSize: 64, // fixed value

skipFrames: 10, // how many frames to go without re-running the detector

},

gender: {

enabled: true,

minConfidence: 0.8, // threshold for discarding a prediction

modelPath: '../models/ssrnet-gender/imdb/model.json',

},

emotion: {

enabled: true,

inputSize: 64, // fixed value

minConfidence: 0.5, // threshold for discarding a prediction

skipFrames: 10, // how many frames to go without re-running the detector

useGrayscale: true, // convert image to grayscale before prediction or use highest channel

modelPath: '../models/emotion/model.json',

},

},

body: {

enabled: true,

modelPath: '../models/posenet/model.json',

inputResolution: 257, // fixed value

outputStride: 16, // fixed value

maxDetections: 10, // maximum number of people detected in the input, should be set to the minimum number for performance

scoreThreshold: 0.7, // threshold for deciding when to remove boxes based on score in non-maximum suppression

nmsRadius: 20, // radius for deciding points are too close in non-maximum suppression

},

hand: {

enabled: true,

inputSize: 256, // fixed value

skipFrames: 10, // how many frames to go without re-running the hand bounding box detector

// if model is running at 25 FPS, we can re-use existing bounding box for updated hand skeleton analysis

// as hand probably hasn't moved much in short time (10 * 1/25 = 0.4 sec)

minConfidence: 0.5, // threshold for discarding a prediction

iouThreshold: 0.3, // threshold for deciding whether boxes overlap too much in non-maximum suppression

scoreThreshold: 0.7, // threshold for deciding when to remove boxes based on score in non-maximum suppression

enlargeFactor: 1.65, // empiric tuning as skeleton prediction prefers hand box with some whitespace

maxHands: 10, // maximum number of hands detected in the input, should be set to the minimum number for performance

detector: {

anchors: '../models/handdetect/anchors.json',

modelPath: '../models/handdetect/model.json',

},

skeleton: {

modelPath: '../models/handskeleton/model.json',

},

},

};
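To illustrate what merging a user object into the defaults means in practice, here is a minimal sketch of a recursive merge. It is an illustration only: `deepMerge` and `isPlainObject` are names chosen here, and Human's own merge routine may differ in details such as how arrays are handled.

```javascript
// Illustrative deep merge; Human's own merge routine may differ in details.
function isPlainObject(value) {
  return value !== null && typeof value === 'object' && !Array.isArray(value);
}

function deepMerge(defaults, overrides) {
  const merged = { ...defaults };
  for (const [key, value] of Object.entries(overrides || {})) {
    merged[key] = isPlainObject(value) && isPlainObject(defaults[key])
      ? deepMerge(defaults[key], value) // merge nested sections key by key
      : value; // primitives and arrays replace the default
  }
  return merged;
}

// Small excerpt of the defaults, for illustration
const defaults = {
  backend: 'webgl',
  face: { enabled: true, detector: { maxFaces: 10, skipFrames: 10 } },
  hand: { enabled: true },
};
const effective = deepMerge(defaults, { face: { detector: { maxFaces: 1 } }, hand: { enabled: false } });
console.log(effective.face.detector); // { maxFaces: 1, skipFrames: 10 }
```

Only the keys named in the user object change. Sibling settings such as skipFrames keep their default values.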

Outputs

Result of human.detect() is a single object that includes data for all enabled modules and all detected objects:

result = {

version: // <string> version string of the human library

face: // <array of detected objects>

[

{

confidence, // <number>

box, // <array [x, y, width, height]>

mesh, // <array of 3D points [x, y, z]> 468 base points & 10 iris points

annotations, // <list of object { landmark: array of points }> 32 base annotated landmarks & 2 iris annotations

iris, // <number> relative distance of iris to camera, multiply by focal length to get actual distance

age, // <number> estimated age

gender, // <string> 'male', 'female'

}

],

body: // <array of detected objects>

[

{

score, // <number>,

keypoints, // <array of 2D landmarks [ score, landmark, position [x, y] ]> 17 annotated landmarks

}

],

hand: // <array of detected objects>

[

{

confidence, // <number>,

box, // <array [x, y, width, height]>,

landmarks, // <array of 3D points [x, y,z]> 21 points

annotations, // <array of 3D landmarks [ landmark: <array of points> ]> 5 annotated landmarks

}

],

emotion: // <array of emotions>

[

{

score, // <number> probability of emotion

emotion, // <string> 'angry', 'discust', 'fear', 'happy', 'sad', 'surpise', 'neutral'

}

],

performance: { // performance data of last execution for each module, measured in milliseconds

body,

hand,

face,

agegender,

emotion,

total,

}

}
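As a rough illustration of how the result object might be consumed, the sketch below condenses it into a few headline values. `summarize` is a hypothetical helper, and the sample values are made up. The field names follow the layout listed above.

```javascript
// Hypothetical helper: condenses a detect() result into a few headline values.
function summarize(result) {
  const emotions = result.emotion || [];
  // pick the emotion entry with the highest score, if any were reported
  const top = emotions.reduce((best, e) => (best === null || e.score > best.score ? e : best), null);
  return {
    faces: (result.face || []).map((f) => ({ age: f.age, gender: f.gender, confidence: f.confidence })),
    bodies: (result.body || []).length,
    hands: (result.hand || []).length,
    topEmotion: top ? top.emotion : null,
  };
}

// Made-up sample values, for illustration only
const sample = {
  face: [{ confidence: 0.98, box: [10, 20, 100, 120], age: 31.5, gender: 'female' }],
  body: [],
  hand: [{ confidence: 0.9, box: [0, 0, 50, 50] }],
  emotion: [{ score: 0.7, emotion: 'happy' }, { score: 0.2, emotion: 'neutral' }],
};
console.log(summarize(sample).topEmotion); // happy
```

The helper treats any missing array as empty, so it also works when some modules are disabled in the configuration.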

Build

If you want to modify the library and perform a full rebuild:

Clone the repository, install dependencies, check for errors, and run a full rebuild, which creates bundles from /src into /dist:

git clone https://github.com/vladmandic/human

cd human

npm install # installs all project dependencies

npm run lint

npm run build

Project is written in pure JavaScript ECMAScript version 2020

Only project dependency is @tensorflow/tfjs

Development dependencies are eslint, used for code linting, and esbuild, used for IIFE and ESM script bundling

Performance

Performance will vary depending on your hardware, but also on the resolution of the input video/image, the enabled modules, and their parameters

For example, on a desktop with a low-end nVidia GTX1050 it can perform multiple face detections at 60+ FPS, but drops to 10 FPS on moderately complex images if all modules are enabled

Performance per module:

- Enabled all: 10 FPS

- Face Detect: 80 FPS (standalone)

- Face Geometry: 30 FPS (includes face detect)

- Face Iris: 25 FPS (includes face detect and face geometry)

- Age: 60 FPS (includes face detect)

- Gender: 60 FPS (includes face detect)

- Emotion: 60 FPS (includes face detect)

- Hand: 40 FPS (standalone)

- Body: 50 FPS (standalone)
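To compare your own hardware against the figures above, the millisecond timings in result.performance can be converted to frames per second. This is a minimal sketch: `timingsToFps` is a hypothetical helper and the timings passed to it are made up.

```javascript
// Hypothetical helper: converts per-module timings (milliseconds) into
// the frame rate each module could sustain on its own.
function timingsToFps(timings) {
  const fps = {};
  for (const [name, ms] of Object.entries(timings)) {
    fps[name] = ms > 0 ? Math.round(1000 / ms) : null; // null when a module did not run
  }
  return fps;
}

// Made-up timings, for illustration only
console.log(timingsToFps({ face: 20, hand: 25, body: 0, total: 100 }));
// { face: 50, hand: 40, body: null, total: 10 }
```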

Library can also be used on mobile devices

Credits

- Face Detection: MediaPipe BlazeFace

- Facial Spatial Geometry: MediaPipe FaceMesh

- Eye Iris Details: MediaPipe Iris

- Hand Detection & Skeleton: MediaPipe HandPose

- Body Pose Detection: PoseNet

- Age & Gender Prediction: SSR-Net

- Emotion Prediction: Oarriaga

Todo

- Tweak default models, parameters and factorization for age/gender/emotion/blazeface

- Add sample images